This lecture discusses mean-square convergence, first for sequences of random variables and then for sequences of random vectors.


As explained previously, different definitions of convergence are based on different ways of measuring how similar to each other two random variables are.

The definition of mean-square convergence is based on the following intuition: two random variables are similar to each other if the square of their difference is small on average.

Remember that a random variable is a mapping from a sample space (e.g., the set of possible outcomes of a coin-flipping experiment) to the set of real numbers (e.g., the winnings from betting on tails).

Let $X$ and $Y$ be random variables defined on the same sample space $\Omega$.

For a fixed sample point $\omega \in \Omega$, the squared difference $(X(\omega) - Y(\omega))^2$ between the two realizations of $X$ and $Y$ provides a measure of how different those two realizations are.

The mean squared difference $E[(X - Y)^2]$ quantifies how different the two realizations are on average (as $\omega$ varies).

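It is a measure of the "distance" between the two variables. In technical terms, its square root $\sqrt{E[(X - Y)^2]}$ is a metric (provided that random variables that are almost surely equal are treated as the same variable). Since the mean squared difference tends to zero if and only if its square root does, either quantity can be used to study convergence.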
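To make the mean squared difference concrete, here is a minimal sketch that computes $E[(X - Y)^2]$ exactly on a two-point sample space. The coin-flip setup, the payoffs, and the name `mean_squared_difference` are illustrative assumptions, not part of the lecture.

```python
from fractions import Fraction

# Hypothetical sample space: one flip of a fair coin.
prob = {"heads": Fraction(1, 2), "tails": Fraction(1, 2)}

# X: winnings from betting 1 unit on tails.
# Y: winnings from betting 2 units on tails.
X = {"heads": -1, "tails": 1}
Y = {"heads": -2, "tails": 2}

def mean_squared_difference(X, Y, prob):
    """Return E[(X - Y)^2] for random variables on a finite sample space."""
    return sum(p * (X[w] - Y[w]) ** 2 for w, p in prob.items())

msd = mean_squared_difference(X, Y, prob)
print(msd)  # 1
```

For each sample point the squared difference is 1, so the average over the sample space is also 1.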

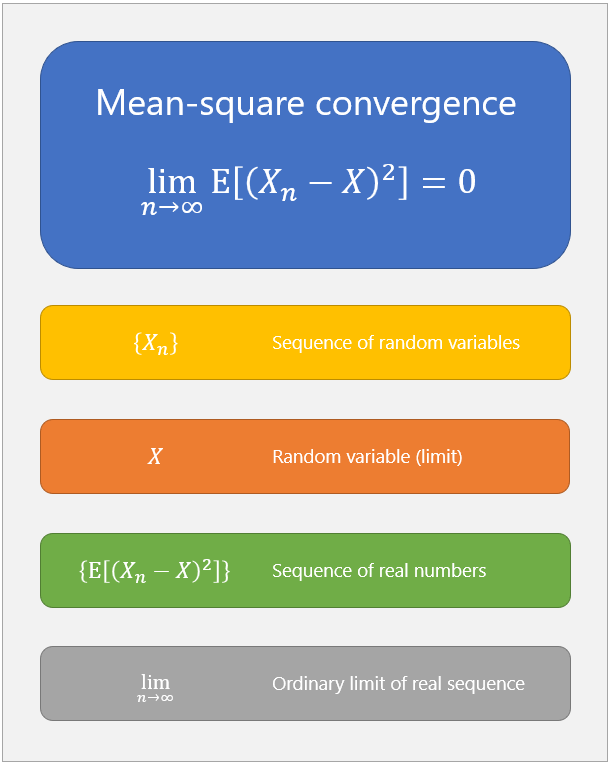

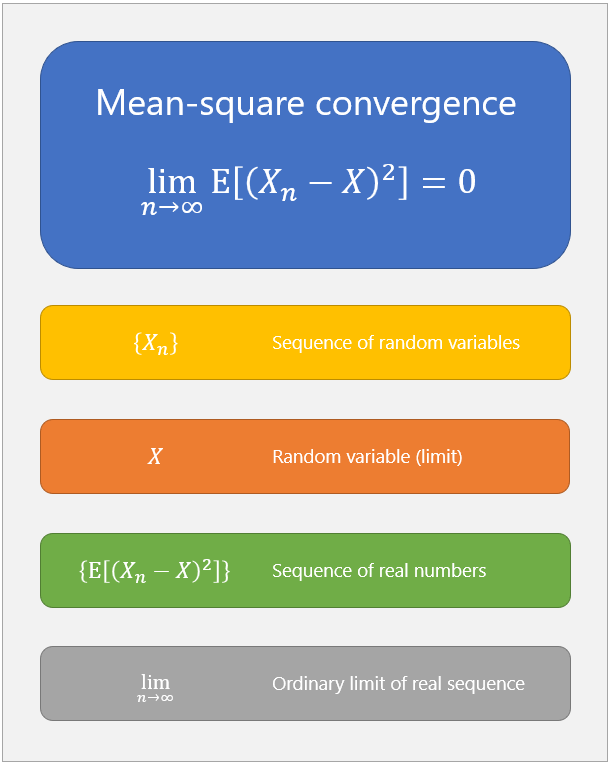

Intuitively, if a sequence $\{X_n\}$ converges to $X$, the mean squared difference $E[(X_n - X)^2]$ should become smaller and smaller as $n$ increases.

In other words, the sequence of real numbers $\{E[(X_n - X)^2]\}$ should converge to zero.

Requiring that a sequence of distances tends to zero is a standard criterion for convergence in a metric space.

This kind of convergence analysis can be carried out only if the expected values of $X_n^2$ and $X^2$ are well-defined and finite.

In technical terms, we say that $X_n$ and $X$ are required to be square integrable.

The considerations above lead us to define mean-square convergence as follows.

Definition Let $\{X_n\}$ be a sequence of square integrable random variables defined on a sample space $\Omega$. We say that $\{X_n\}$ is mean-square convergent (or convergent in mean-square) if and only if there exists a square integrable random variable $X$ such that

$$\lim_{n \to \infty} E[(X_n - X)^2] = 0.$$

The variable $X$ is called the mean-square limit of the sequence and convergence is indicated by $X_n \overset{m.s.}{\longrightarrow} X$ or by $X_n \overset{L^2}{\longrightarrow} X$.

The notation $L^2$ indicates that convergence is in the $L^p$ space $L^2$ (the space of square integrable functions).

The following example illustrates the concept of mean-square convergence.

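Let $\{X_n\}$ be a covariance stationary sequence of random variables such that all the random variables in the sequence have:

- the same expected value $\mu$;
- the same variance $\sigma^2$;
- zero covariance with each other.

Define the sample mean $\bar{X}_n$ as follows:

$$\bar{X}_n = \frac{1}{n} \sum_{i=1}^{n} X_i$$

and define a constant random variable $X = \mu$.

The distance between a generic term of the sequence $\{\bar{X}_n\}$ and $X$ is

$$E[(\bar{X}_n - X)^2] = E[(\bar{X}_n - \mu)^2].$$

But $\mu$ is equal to the expected value of $\bar{X}_n$ because

$$E[\bar{X}_n] = \frac{1}{n} \sum_{i=1}^{n} E[X_i] = \frac{1}{n} \, n \mu = \mu.$$

Therefore,

$$E[(\bar{X}_n - \mu)^2] = \mathrm{Var}[\bar{X}_n]$$

by the very definition of variance.

In turn, the variance of $\bar{X}_n$ is

$$\mathrm{Var}[\bar{X}_n] = \frac{1}{n^2} \sum_{i=1}^{n} \mathrm{Var}[X_i] = \frac{1}{n^2} \, n \sigma^2 = \frac{\sigma^2}{n},$$

where the first equality holds because all the covariances are zero. Thus,

$$\lim_{n \to \infty} E[(\bar{X}_n - X)^2] = \lim_{n \to \infty} \frac{\sigma^2}{n} = 0.$$

But this is just the definition of mean-square convergence of $\bar{X}_n$ to $X$.

Therefore, the sequence $\{\bar{X}_n\}$ converges in mean-square to the constant random variable $X = \mu$.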
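The rate at which the sample mean approaches its limit can be checked numerically. The following is a rough Monte Carlo sketch, assuming independent normal draws (a special case of the uncorrelated sequence in the example) with mean `mu` and standard deviation `sigma`; these values and the function name `estimate_mean_squared_distance` are illustrative choices, not part of the lecture.

```python
import random

def estimate_mean_squared_distance(n, reps, mu, sigma, rng):
    """Monte Carlo estimate of E[(sample mean of n draws - mu)^2]."""
    total = 0.0
    for _ in range(reps):
        sample_mean = sum(rng.gauss(mu, sigma) for _ in range(n)) / n
        total += (sample_mean - mu) ** 2
    return total / reps

rng = random.Random(0)  # fixed seed so that runs are repeatable
mu, sigma = 1.0, 2.0    # assumed values, chosen only for illustration
for n in (10, 100):
    estimate = estimate_mean_squared_distance(n, 5000, mu, sigma, rng)
    # Print the estimate next to the theoretical value sigma^2 / n.
    print(n, estimate, sigma ** 2 / n)
```

The estimates should be close to $\sigma^2 / n$ and shrink roughly tenfold when $n$ goes from 10 to 100.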

The above notion of convergence generalizes to sequences of random vectors in a straightforward manner.

Let $\{X_n\}$ be a sequence of random vectors defined on a sample space $\Omega$, where each random vector $X_n$ has dimension $K \times 1$.

The sequence of random vectors $\{X_n\}$ is said to converge to a random vector $X$ in mean-square if $\{X_n\}$ converges to $X$ according to the metric

$$d(X_n, X) = E\left[\lVert X_n - X \rVert^2\right] = E\left[(X_{n,1} - X_1)^2 + \ldots + (X_{n,K} - X_K)^2\right],$$

where $\lVert X_n - X \rVert$ is the Euclidean norm of the difference between $X_n$ and $X$ and the second subscript is used to indicate the individual components of the vectors $X_n$ and $X$.

The distance $d(X_n, X)$ is well-defined only if the expected value on the right-hand side exists. A sufficient condition for its existence is that all the components of $X_n$ and $X$ be square integrable random variables.

Intuitively, for a fixed sample point $\omega$, the square of the Euclidean norm $\lVert X_n(\omega) - X(\omega) \rVert^2$ provides a measure of the distance between two realizations of $X_n$ and $X$.

The mean $E\left[\lVert X_n - X \rVert^2\right]$ provides a measure of how different those two realizations are on average (as $\omega$ varies).

If the distance $d(X_n, X)$ becomes smaller and smaller as $n$ increases, then the sequence of random vectors $\{X_n\}$ converges to the vector $X$.

The following definition formalizes what we have just said.

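Definition Let $\{X_n\}$ be a sequence of random vectors defined on a sample space $\Omega$, whose entries are square integrable random variables. We say that $\{X_n\}$ is mean-square convergent if and only if there exists a random vector $X$ with square integrable entries such that

$$\lim_{n \to \infty} E\left[\lVert X_n - X \rVert^2\right] = 0.$$

Again, $X$ is called the mean-square limit of the sequence and convergence is indicated by $X_n \overset{m.s.}{\longrightarrow} X$ or by $X_n \overset{L^2}{\longrightarrow} X$.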

A sequence of random vectors is convergent in mean-square if and only if all the sequences of entries of the random vectors are.

Proposition Let $\{X_n\}$ be a sequence of random vectors defined on a sample space $\Omega$, such that their entries are square integrable random variables. Denote by $\{X_{n,i}\}$ the sequence of random variables obtained by taking the $i$-th entry of each random vector $X_n$. The sequence $\{X_n\}$ converges in mean-square to the random vector $X$ if and only if $\{X_{n,i}\}$ converges in mean-square to the random variable $X_i$ (the $i$-th entry of $X$) for each $i = 1, \ldots, K$.
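One way to see why the proposition holds is sketched below. It is not necessarily the author's proof; it writes $K$ for the dimension of the vectors and $X_{n,i}$, $X_i$ for their $i$-th entries, and relies only on the linearity of the expected value and on the fact that every squared term is non-negative.

```latex
\begin{aligned}
E\left[\lVert X_n - X \rVert^2\right]
  &= \sum_{i=1}^{K} E\left[(X_{n,i} - X_i)^2\right], \\
0 \le E\left[(X_{n,i} - X_i)^2\right]
  &\le E\left[\lVert X_n - X \rVert^2\right]
  \quad \text{for each } i = 1, \ldots, K .
\end{aligned}
```

The inequality shows that if the vector distance tends to zero, then so does each entry-wise distance. Conversely, the first line shows that the vector distance is a finite sum of $K$ entry-wise distances, so it tends to zero whenever all of them do.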

Below you can find some exercises with explained solutions.

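Let $U$ be a random variable having a uniform distribution on the interval $[1, 2]$.

In other words, $U$ is a continuous random variable with support $R_U = [1, 2]$ and probability density function

$$f_U(u) = \begin{cases} 1 & \text{if } u \in [1, 2] \\ 0 & \text{if } u \notin [1, 2] \end{cases}$$

Consider a sequence of random variables $\{X_n\}$ whose generic term is

$$X_n = 1_{\{U \in [1, 2 - 1/n]\}}$$

where $1_{\{U \in [1, 2 - 1/n]\}}$ is the indicator function of the event $\{U \in [1, 2 - 1/n]\}$.

Find the mean-square limit (if it exists) of the sequence $\{X_n\}$.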

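When $n$ tends to infinity, the interval $[1, 2 - 1/n]$ becomes similar to the interval $[1, 2]$ because

$$\lim_{n \to \infty} \left(2 - \frac{1}{n}\right) = 2.$$

Therefore, we conjecture that the indicators $1_{\{U \in [1, 2 - 1/n]\}}$ converge in mean-square to the indicator $1_{\{U \in [1, 2]\}}$. But $1_{\{U \in [1, 2]\}}$ is always equal to $1$, so our conjecture is that the sequence $\{X_n\}$ converges in mean-square to $1$. To verify our conjecture, we need to verify that

$$\lim_{n \to \infty} E[(X_n - 1)^2] = 0.$$

The expected value can be computed as follows:

$$E[(X_n - 1)^2] = E\left[\left(1_{\{U \in [1, 2 - 1/n]\}} - 1\right)^2\right] = E\left[1_{\{U \in (2 - 1/n, 2]\}}\right] = \int_{2 - 1/n}^{2} du = \frac{1}{n}.$$

Thus, the sequence $\{X_n\}$ converges in mean-square to $1$ because

$$\lim_{n \to \infty} E[(X_n - 1)^2] = \lim_{n \to \infty} \frac{1}{n} = 0.$$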
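The distance computed in this solution can be checked by simulation. The sketch below assumes that $U$ is uniform on $[1, 2]$ and that $X_n$ is the indicator of the event $U \in [1, 2 - 1/n]$, in which case $E[(X_n - 1)^2]$ should equal $1/n$; the function name `estimate_distance_from_one` and the number of draws are illustrative choices.

```python
import random

def estimate_distance_from_one(n, draws, rng):
    """Monte Carlo estimate of E[(X_n - 1)^2], X_n = 1{U in [1, 2 - 1/n]}."""
    total = 0
    for _ in range(draws):
        u = rng.uniform(1.0, 2.0)              # assumed: U uniform on [1, 2]
        x_n = 1 if u <= 2.0 - 1.0 / n else 0   # indicator of the event
        total += (x_n - 1) ** 2
    return total / draws

rng = random.Random(0)  # fixed seed so that runs are repeatable
for n in (2, 4, 20):
    # Print the estimate next to the theoretical value 1 / n.
    print(n, estimate_distance_from_one(n, 20000, rng), 1 / n)
```

Each estimate is simply the fraction of draws that fall in $(2 - 1/n, 2]$, which is why it tracks $1/n$.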

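Let $\{X_n\}$ be a sequence of discrete random variables, and let the probability mass function of a generic term $X_n$ of the sequence be

$$p_{X_n}(x) = \begin{cases} 1 - \frac{1}{n} & \text{if } x = 0 \\ \frac{1}{n} & \text{if } x = n \\ 0 & \text{otherwise} \end{cases}$$

Find the mean-square limit (if it exists) of the sequence $\{X_n\}$.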

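Note that

$$\lim_{n \to \infty} P(X_n = 0) = \lim_{n \to \infty} \left(1 - \frac{1}{n}\right) = 1.$$

Therefore, one would expect that the sequence $\{X_n\}$ converges to the constant random variable $X = 0$. However, the sequence $\{X_n\}$ does not converge in mean-square to $0$. The distance of a generic term of the sequence from $0$ is

$$E[(X_n - 0)^2] = E[X_n^2] = 0^2 \cdot \left(1 - \frac{1}{n}\right) + n^2 \cdot \frac{1}{n} = n.$$

Thus,

$$\lim_{n \to \infty} E[(X_n - 0)^2] = \infty$$

while, if $\{X_n\}$ were convergent to $0$ in mean-square, we would have

$$\lim_{n \to \infty} E[(X_n - 0)^2] = 0.$$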

Does the sequence in the previous exercise converge in probability?

The sequence $\{X_n\}$ converges in probability to the constant random variable $X = 0$ because, for any $\varepsilon > 0$, we have that

$$\lim_{n \to \infty} P(|X_n - 0| > \varepsilon) \le \lim_{n \to \infty} P(X_n = n) = \lim_{n \to \infty} \frac{1}{n} = 0.$$
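The contrast between the last two exercises can be made concrete with exact arithmetic. The sketch below assumes that $X_n$ takes the value $0$ with probability $1 - 1/n$ and the value $n$ with probability $1/n$; the helper names `pmf`, `second_moment` and `prob_far_from_zero` are hypothetical.

```python
from fractions import Fraction

def pmf(n):
    """Assumed pmf of X_n: mass 1 - 1/n at 0 and mass 1/n at n."""
    return {0: 1 - Fraction(1, n), n: Fraction(1, n)}

def second_moment(n):
    """E[(X_n - 0)^2], the mean squared distance from the constant 0."""
    return sum(p * x ** 2 for x, p in pmf(n).items())

def prob_far_from_zero(n, eps):
    """P(|X_n - 0| > eps)."""
    return sum(p for x, p in pmf(n).items() if abs(x) > eps)

for n in (1, 10, 100, 1000):
    # The second moment grows like n while the probability shrinks like 1/n.
    print(n, second_moment(n), prob_far_from_zero(n, Fraction(1, 2)))
```

Under this assumption the mean squared distance from $0$ diverges even though the probability of being away from $0$ vanishes, which is why the sequence converges in probability but not in mean-square.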

Taboga, Marco (2021). "Mean-square convergence", Lectures on probability theory and mathematical statistics. Kindle Direct Publishing. Online appendix. https://www.statlect.com/asymptotic-theory/mean-square-convergence.
